Title of paper under discussion

Left Hemispheric Lateralization of Brain Activity During Passive Rhythm Perception in Musicians

Authors

Charles J Limb, Stefan Kemeny, Eric B Ortigoza, Sherin Rouhani and Allen R Braun

Journal

The Anatomical Record Part A, vol. 288A, pp. 382–389 (2006)

Link to Paper (free access)

Overview

Twelve musicians and twelve non-musicians listened to synthesised drum rhythms whilst lying in an fMRI (functional Magnetic Resonance Imaging) scanner. Some brain regions – across both brain hemispheres – ‘lit up’ in musicians and non-musicians alike. But musicians’ brains showed greater activity than non-musicians’ brains in the left hemisphere, especially in the language processing areas, whereas the non-musicians’ brains out-lit musicians’ brains in the right hemisphere, including some motor (movement) areas.

The authors conclude that “musical training leads to the employment of left-sided perisylvian brain areas, typically active during language comprehension, during passive rhythm perception” and that such training also teaches musicians “to dissociate incoming rhythmic input from motor responses”.

Background

Limb and his team from Maryland, US, were keen to investigate the site of rhythmic music processing in the brain. Previous studies, Limb argues, painted an inconsistent, and sometimes contradictory, picture. Some such studies “included aspects of pitch, melody, and timbre, making it difficult to isolate those neural elements responsible for rhythm alone.” Other studies “examined motor aspects of rhythm production” whereas Limb wanted to “separate perceptual and productive aspects of rhythm, as they appear to implicate different (if overlapping) neural subsystems.” Still other studies did not distinguish between people with and without musical training, or investigated only “active listening” (in which participants have to engage with the rhythm they are hearing) rather than the “passive listening” that is more common in everyday life.

Hence Limb and his team prepared an experiment in which:

- they used a simple synthesised snare drum rhythm whereby “all melodic elements were eliminated, allowing examination of the brain’s responses to rhythm alone”

- they used a rhythm that was strictly ‘quantised’ and writable in music notation, plus a ‘control’ rhythm that was random and ‘unquantised’

- the listening was passive, not active – i.e. the listener wasn’t expected to carry out a task in response to the rhythm

Method

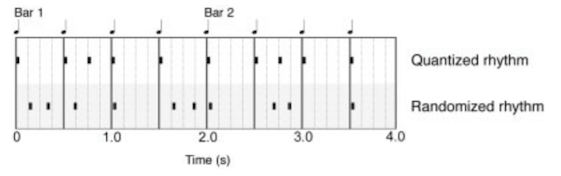

12 musicians and 12 non-musicians listened to snare drum rhythms, in 4:4 time signature at a tempo of 120 bpm, whilst in an fMRI scanner. Some of the rhythms were ‘quantised’, falling exactly on the beats and their subdivisions, and others were randomised, without any rhythmic regularity:

The fMRI images taken during the rhythm-listening were then compared and contrasted so that the scientists could see which brain areas became active 1) in both musicians and non-musicians, 2) in musicians more than in non-musicians, and 3) in non-musicians more than in musicians.
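
To make the difference between the ‘quantised’ and randomised rhythms described in this section concrete, here is a minimal Python sketch. It is only an illustration, not the stimulus code used in the study: the sixteenth-note grid, the example pattern and all function names are assumptions of mine. Only the 120 bpm tempo and the 4/4 time signature come from the paper.

```python
import random

BPM = 120                   # tempo reported in the paper
BEATS_PER_BAR = 4           # 4/4 time signature
SUBDIVISIONS_PER_BEAT = 4   # assumption: a sixteenth-note grid

# Duration of one grid slot in seconds (0.125 s at 120 bpm).
STEP = 60.0 / BPM / SUBDIVISIONS_PER_BEAT
BAR_SECONDS = BEATS_PER_BAR * 60.0 / BPM


def quantised_rhythm(pattern):
    """Return drum-hit times (seconds) for a one-bar pattern.

    `pattern` has one character per grid slot: 'x' is a hit, '.' a rest.
    Every hit falls exactly on a beat or one of its subdivisions.
    """
    return [i * STEP for i, slot in enumerate(pattern) if slot == "x"]


def randomised_rhythm(n_hits, seed=0):
    """Return `n_hits` hit times scattered anywhere within one bar."""
    rng = random.Random(seed)  # seeded so the example is repeatable
    return sorted(rng.uniform(0.0, BAR_SECONDS) for _ in range(n_hits))


def is_on_grid(onsets, tolerance=1e-9):
    """True if every hit time is a whole number of grid slots."""
    return all(abs(t / STEP - round(t / STEP)) < tolerance for t in onsets)


# Hypothetical one-bar pattern, chosen purely for illustration.
quantised = quantised_rhythm("x...x.x.x...x.xx")
randomised = randomised_rhythm(n_hits=len(quantised))

print(quantised)              # hits land on multiples of 0.125 s
print(is_on_grid(quantised))  # True
print(randomised)             # same number of hits, no regular spacing
```

The quantised hits could be written out in ordinary music notation, whereas the randomised hits keep the same number of drum strokes but have no underlying pulse.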

Results and discussion

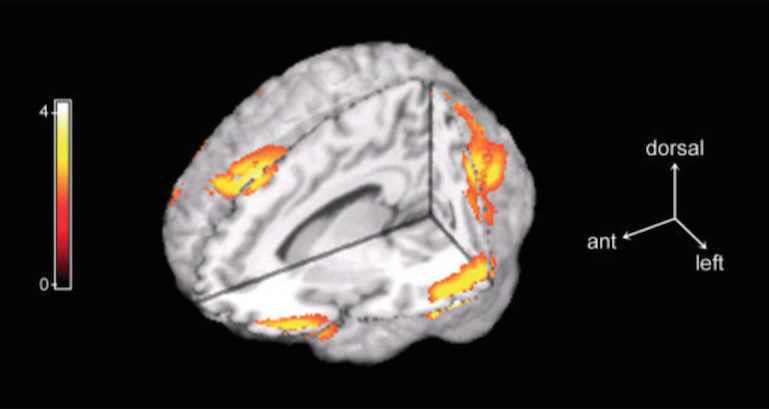

Here is a diagram of the brain areas that ‘lit up’ in both musicians and non-musicians in response to quantised rhythm:

These common areas include right and left Superior Temporal Gyrus, right Frontal Operculum, right Superior Frontal Gyrus and left Ventral Supramarginal Gyrus. These regions, writes Limb, highlight “a basic network for the processing of quantised rhythms that is activated in both musicians and nonmusicians, which may reflect an innate musical competence that is independent of training”.

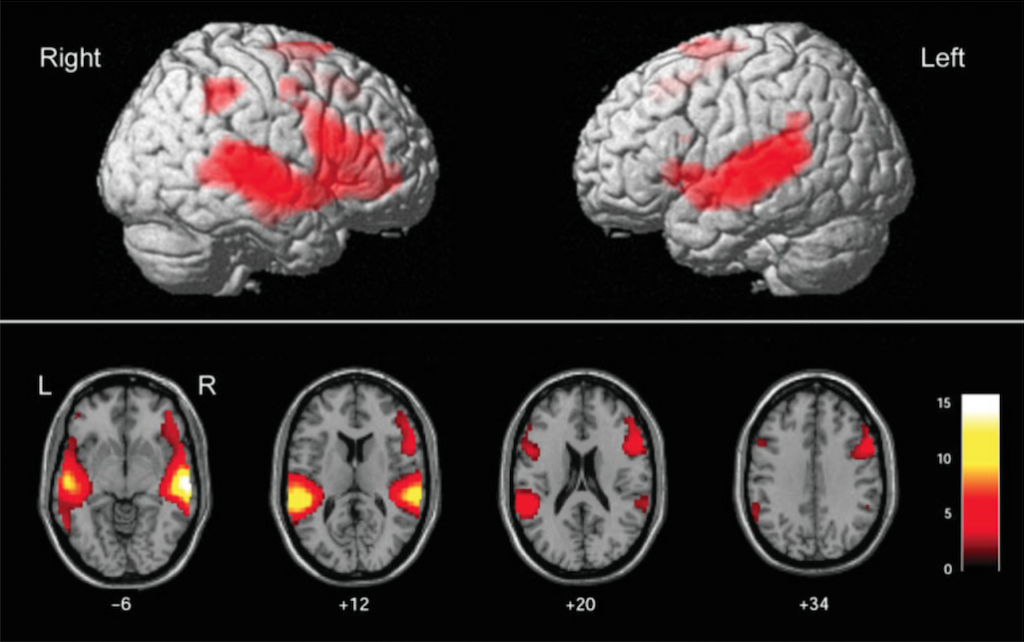

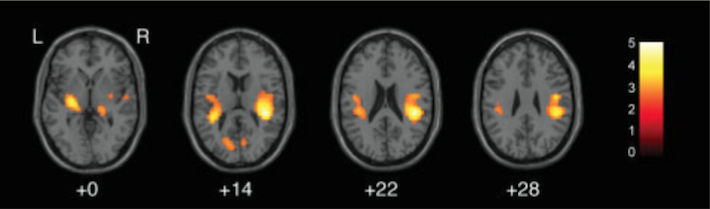

The next diagram describes those brain areas that are more active in a musician’s brain compared with a non-musician’s brain as each listens to quantised rhythms:

This contrast revealed strong activation of musicians’ brain areas associated with language processing, especially when the rhythms were quantised instead of randomised. Some activation was present in both hemispheres [left and right Anterior Middle Temporal Gyrus (MTG)] but most was present in the left hemisphere [left Frontal Operculum, left Middle MTG, left Inferior Parietal Lobule, left Superior Frontal Gyrus and left Middle Frontal Gyrus].

Limb thereby suggests that “formal music training may lead to left-lateralised activity during passive rhythm perception” and notes that this pattern of activity in a musician’s brain during rhythm perception is very similar to the activity seen during “language comprehension at the sentence and narrative levels”.

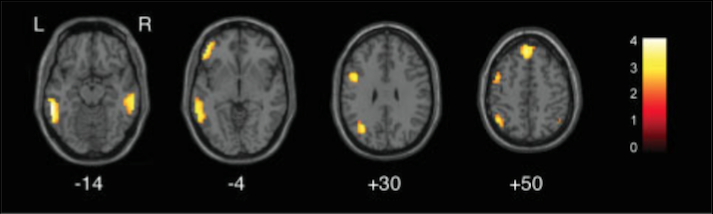

In contrast, the non-musicians tended to show more right hemispheric activity in response to rhythm:

The regions lighting up in the non-musicians’ brains included the right auditory cortex (Superior and Transverse Temporal Gyrus), together with the right Globus Pallidus and right/left Precentral Gyrus. These latter two areas are motor regions of the brain, leading Limb to suggest that these “findings imply a basic, training-independent link in nonmusicians between rhythmic auditory input and neural systems responsible for motor control, with an emphasis on right-sided neural mechanisms”.

Conclusion

Limb and his team highlight a strong contrast between the brains of musicians and non-musicians in their response to listening to rhythms. The non-musician’s brain response tends to be right-lateralised, involving motor areas, whereas the musician’s brain response tends to be left-lateralised, involving language areas. Limb writes that musicians “appeared to dissociate incoming rhythmic input from motor responses relative to nonmusicians and instead utilised an analytic mode of processing concentrated in the left hemisphere”.

That rhythm should elicit a motor response in non-musicians’ brains is unsurprising; even though no movement was observed, finger or foot tapping is a universal response to musical rhythm. But why, wonders Limb, does rhythm evoke activity in the language areas of musicians’ brains?

On a superficial level, he argues, rhythm is an important part of speech: “spoken languages have a readily apparent rhythmic flow that contributes to phrasing, prosody and cadence.”

But Limb suggests there may be “deeper parallels that account for a more robust relationship between language and rhythm in musicians”, reminding us that rhythm “possesses generative features much like language does”. Just as metre and phonology (speech sounds) combine in many permutations to effect meaning in language, so too do notes and rhythms in music. Limb explains that “these parallels between deeper aspects of rhythm and language suggest that rhythm processing might be linked to language mechanisms in the musically trained.”

Coda

I Got Rhythm – George Gershwin (1898-1937)

[played here by the composer in New York, 1931]